Dear Friends,

http://www.kurzweilai.net/ibm-reveals-five-innovations-that-will-change-our-lives-within-five-years

Be Well.

David

IBM reveals five innovations that will change our lives within five years

The era of cognitive systems: when computers will, in their own way, see, smell, touch, taste and hear

December 18, 2012

IBM announced today the seventh annual “IBM 5 in 5,” a list of innovations that have the potential to change the way people work, live and interact during the next five years, based on market and societal trends as well as emerging technologies from IBM’s R&D labs. This one is focused on cognitive systems.

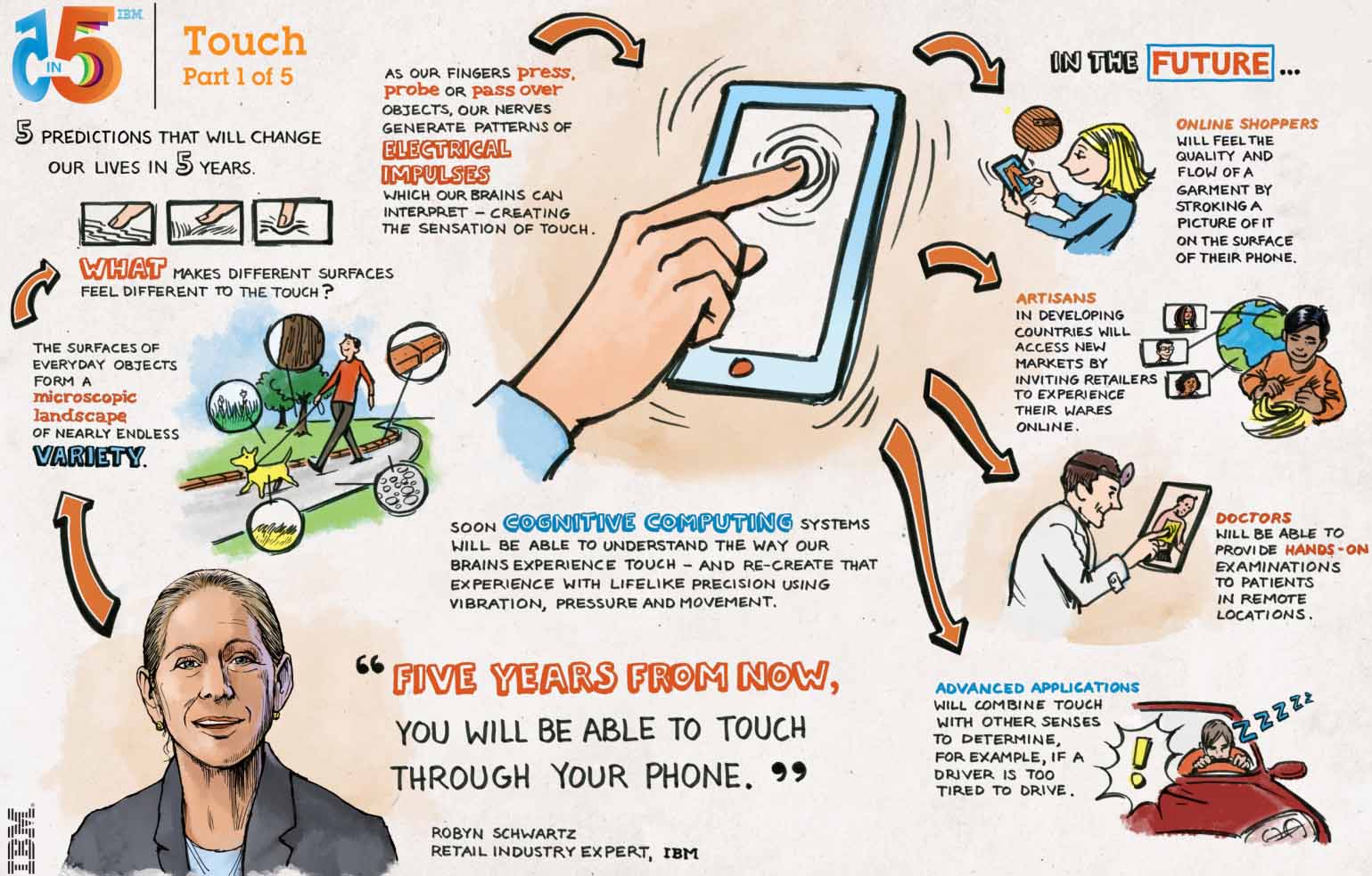

In the next five years, industries such as retail will be transformed by the ability to “touch” a product through your mobile device, using haptic, infrared and pressure-sensitive technologies to simulate touch, such as the texture and weave of a fabric as a shopper brushes their finger over the image of the item on a device screen.

Each object will have a unique set of vibration patterns that represents the touch experience: short fast patterns, or longer and stronger sets of vibrations. The vibration pattern will differentiate silk from linen or cotton, helping simulate the physical sensation of actually touching the material.
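As a rough illustration of what a per-material vibration pattern might look like in software, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `VibrationPattern` fields, the `FABRIC_PATTERNS` values and the `pulse_schedule` helper are hypothetical and do not describe IBM's technology.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VibrationPattern:
    pulse_ms: int     # duration of each vibration pulse
    gap_ms: int       # pause between pulses
    amplitude: float  # relative strength, 0.0 to 1.0


# Hypothetical values, chosen only to contrast "short fast" patterns
# with "longer and stronger" ones.
FABRIC_PATTERNS = {
    "silk": VibrationPattern(pulse_ms=10, gap_ms=5, amplitude=0.2),
    "cotton": VibrationPattern(pulse_ms=30, gap_ms=20, amplitude=0.5),
    "linen": VibrationPattern(pulse_ms=60, gap_ms=30, amplitude=0.8),
}


def pulse_schedule(fabric: str, duration_ms: int) -> list[tuple[int, int, float]]:
    """Return (start_ms, end_ms, amplitude) pulses that fit in duration_ms."""
    pattern = FABRIC_PATTERNS[fabric]
    pulses = []
    start = 0
    while start < duration_ms:
        # Clip the last pulse so it never runs past the requested duration.
        end = min(start + pattern.pulse_ms, duration_ms)
        pulses.append((start, end, pattern.amplitude))
        start = end + pattern.gap_ms
    return pulses
```

A device driver could then play the schedule for whichever fabric is under the shopper's finger, for example `pulse_schedule("silk", 30)` for a short swipe.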

Current uses of haptic and graphic technology, in the gaming industry for example, already take the end user into a simulated environment.

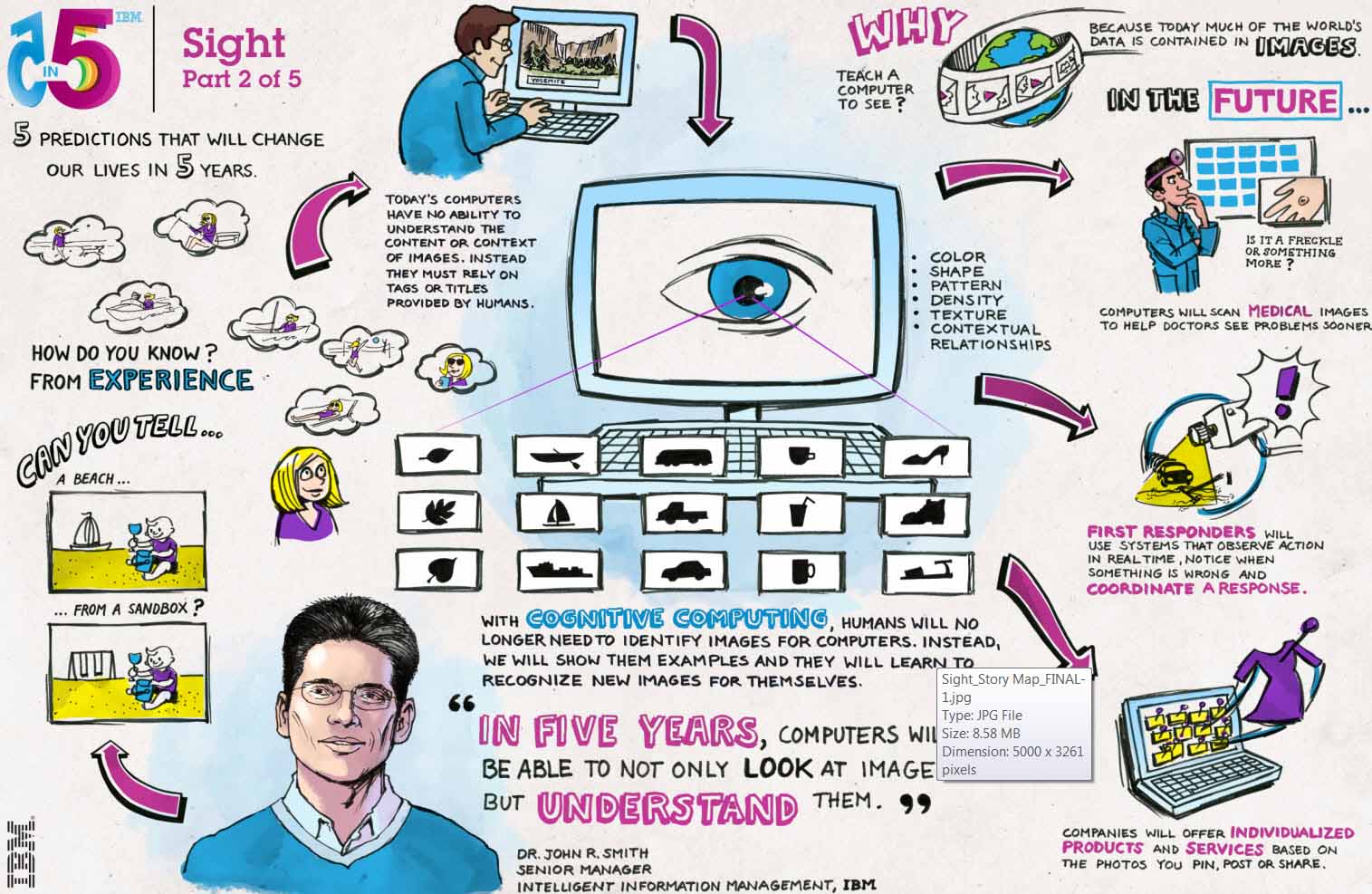

We take 500 billion photos a year[1]. 72 hours of video is uploaded to YouTube every minute[2]. The global medical diagnostic imaging market is expected to grow to $26.6 billion by 2016[3].

In the next five years, “brain-like” capabilities will let computers analyze features in visual media such as color, texture patterns, or edge information and extract insights. This will have a profound impact on industries such as healthcare, retail and agriculture.
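To make “edge information” concrete, the following Python sketch computes a very simple edge-strength map for a small grayscale image. It is only an assumed, textbook-style neighbour-difference measure written for illustration; the `edge_strength` function and the sample `image` are hypothetical and are not taken from IBM's systems.

```python
def edge_strength(image):
    """Per pixel, sum the absolute differences to the right and lower neighbours."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Pixels on the last column or row have no neighbour on that side.
            dx = image[r][c + 1] - image[r][c] if c + 1 < cols else 0
            dy = image[r + 1][c] - image[r][c] if r + 1 < rows else 0
            out[r][c] = abs(dx) + abs(dy)
    return out


# A tiny image with a dark left half and a bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
edges = edge_strength(image)
```

Large values in `edges` mark the boundary between the dark and bright regions; a real system would feed features like these, along with color and texture, into a trained model.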

These capabilities will be put to work in healthcare by making sense of massive volumes of medical information, such as MRIs, CT scans, X-rays and ultrasound images, to capture information tailored to particular anatomy or pathologies. By being trained to discriminate what to look for in images, such as differentiating healthy from diseased tissue, and correlating that with patient records and scientific literature, systems that can “see” will help doctors detect medical problems with far greater speed and accuracy.

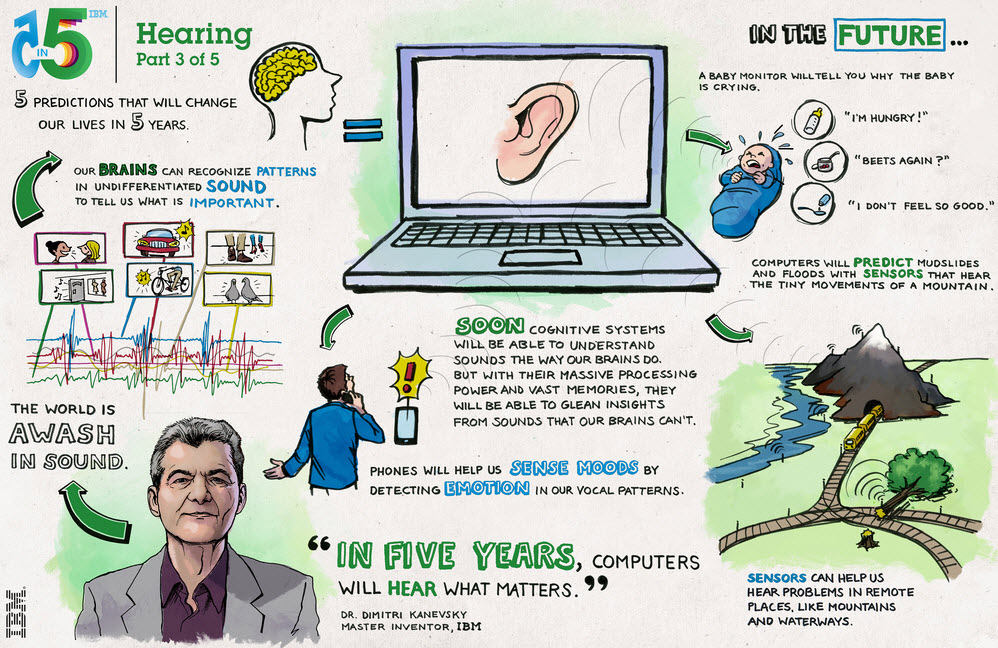

Within five years, a distributed system of clever sensors will detect elements of sound such as sound pressure, vibrations and sound waves at different frequencies. It will interpret these inputs to predict when trees will fall in a forest or when a landslide is imminent. Such a system will “listen” to our surroundings and measure movements, or the stress in a material, to warn us if danger lies ahead.

Raw sounds will be detected by sensors, much like the human brain. A system that receives this data will take into account other modalities, such as visual or tactile information, and classify and interpret the sounds based on what it has learned. When new sounds are detected, the system will form conclusions based on previous knowledge and the ability to recognize patterns.

For example, “baby talk” will be understood as a language, telling parents or doctors what infants are trying to communicate. Sounds can be a trigger for interpreting a baby’s behavior or needs. By being taught what baby sounds mean (whether fussing indicates a baby is hungry, hot, tired or in pain), a sophisticated speech recognition system would correlate sounds and babbles with other sensory or physiological information such as heart rate, pulse and temperature.

In the next five years, by learning about emotion and being able to sense mood, systems will pinpoint aspects of a conversation and analyze pitch, tone and hesitancy to help us have more productive dialogues that could improve customer call center interactions, or allow us to seamlessly interact with different cultures.

For example, today, IBM scientists are beginning to capture underwater noise levels in Galway Bay, Ireland to understand the sounds and vibrations of wave energy conversion machines, and the impact on sea life, by using underwater sensors that capture sound waves and transmit them to a receiving system to be analyzed.

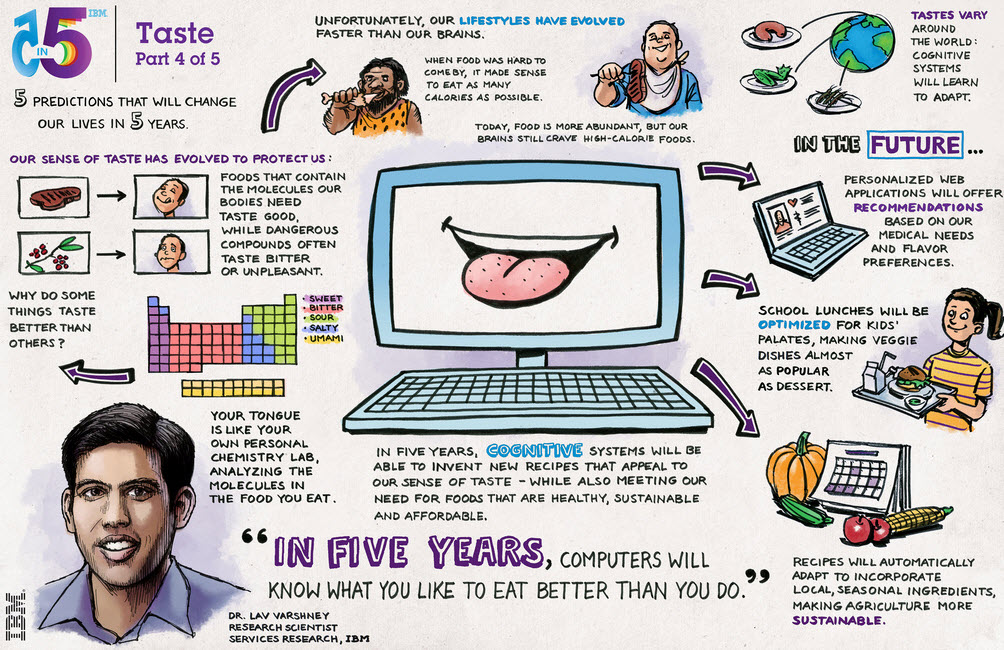

What if we could make healthy foods taste delicious using a different kind of computing system that is built for creativity?

IBM researchers are developing a computing system that detects flavor, to be used with chefs to create the tastiest and most novel recipes. It will break down ingredients to their molecular level and blend the chemistry of food compounds with the psychology behind what flavors and smells humans prefer. By comparing this with millions of recipes, the system will be able to create new flavor combinations that pair, for example, roasted chestnuts with other foods such as cooked beetroot, fresh caviar, and dry-cured ham.

A system like this can also be used to help us eat healthier, creating novel flavor combinations that will make us crave a vegetable casserole instead of potato chips.

The computer will be able to use algorithms to determine the precise chemical structure of food and why people like certain tastes. These algorithms will examine how chemicals interact with each other, the molecular complexity of flavor compounds and their bonding structure, and use that information, together with models of perception, to predict the taste appeal of flavors.

Not only will it make healthy foods more palatable, it will also surprise us with unusual pairings of foods actually designed to maximize our experience of taste and flavor. In the case of people with special dietary needs, such as individuals with diabetes, it would develop flavors and recipes to keep their blood sugar regulated, but satisfy their sweet tooth.

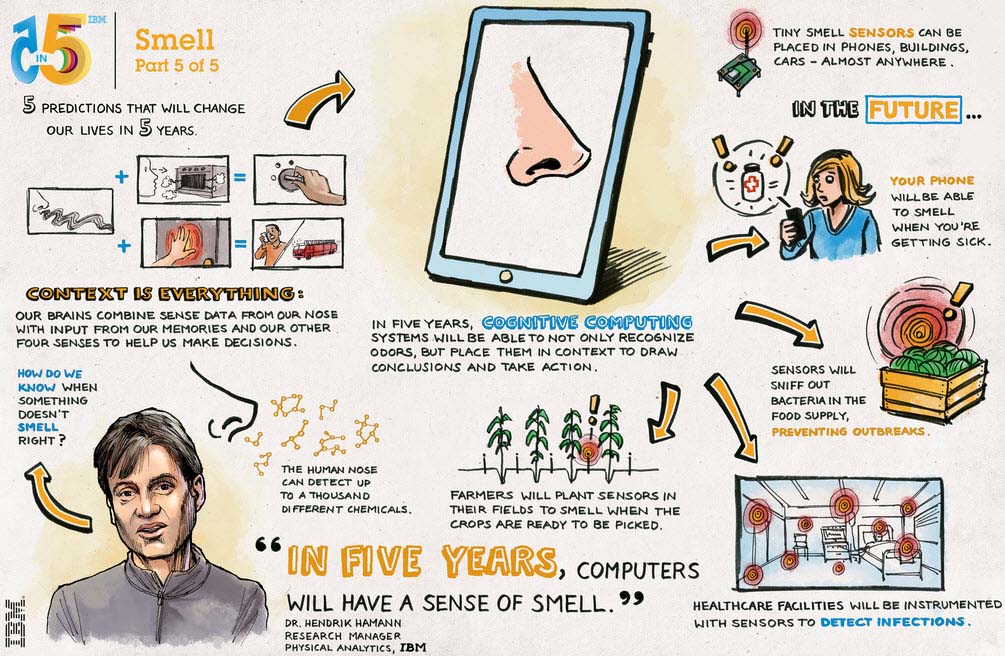

During the next five years, tiny sensors embedded in your computer or cell phone will detect if you’re coming down with a cold or other illness. By analyzing odors, biomarkers and thousands of molecules in someone’s breath, doctors will have help diagnosing and monitoring the onset of ailments such as liver and kidney disorders, asthma, diabetes and epilepsy by detecting which odors are normal and which are not.

In the next five years, IBM technology will “smell” surfaces for disinfectants to determine whether rooms have been sanitized. Using novel wireless “mesh” networks, sensors will gather and measure data on various chemicals, and will continuously learn and adapt to new smells over time.

Due to advances in sensor and communication technologies in combination with deep learning systems, sensors can measure data in places never thought possible. For example, computer systems can be used in agriculture to “smell” or analyze the soil condition of crops. In urban environments, this technology will be used to monitor issues with refuse, sanitation and pollution, helping city agencies spot potential problems before they get out of hand.

Today IBM scientists are already sensing environmental conditions and gases to preserve works of art. This innovation is beginning to be applied to tackle clinical hygiene, one of the biggest challenges in healthcare today.

Antibiotic-resistant bacteria such as methicillin-resistant Staphylococcus aureus (MRSA), which in 2005 was associated with almost 19,000 hospital stay-related deaths in the United States, are commonly found on the skin and can be easily transmitted wherever people are in close contact. One way of fighting MRSA exposure in healthcare institutions is by ensuring medical staff follow clinical hygiene guidelines.
